When you watch a movie like Avatar or The Lord of the Rings and see Gollum move with real emotion, or Neytiri blink like a living person, you’re not watching pure CGI. You’re watching motion capture animation: a bridge between human performance and digital worlds. It’s not magic. It’s physics, sensors, and actors doing the hard work of pretending to be something they’re not.

Before motion capture, animators drew every frame by hand. Even with early computer tools, making a character move naturally meant watching real people, then guessing how their joints bent, how their shoulders rolled, how their eyebrows lifted when they were scared. That guesswork often looked stiff. Fake. Unbelievable. Motion capture changed that by recording real movement and putting it directly onto digital models. No guessing. No estimating. Just truth.

How Motion Capture Actually Works

At its core, motion capture is about tracking points on a body. Actors wear tight suits covered in small reflective markers or embedded sensors. In optical systems, cameras around the stage, sometimes dozens of them, track where those markers are in 3D space. Many times per second (often well over a hundred), the system records positions like: left elbow at (x=1.2, y=0.8, z=1.5), right knee at (x=0.9, y=0.3, z=1.1). That data gets cleaned up, mapped onto a digital skeleton, and used to drive the character’s animation.
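To make the "mapped onto a digital skeleton" step a little more concrete, here is a minimal sketch, assuming we already have cleaned 3D positions for three markers: shoulder, elbow, and wrist. It computes how far the elbow is bent, the kind of value a solver turns into a joint rotation on the skeleton. Only the elbow coordinates come from the example above; the shoulder and wrist positions and the function name `joint_angle` are hypothetical. Real pipelines solve full 3D rotations for every joint at once, so treat this as an illustration of the idea rather than how any particular system works.

```python
import math

# A marker position in 3D space (x, y, z), in whatever units the capture system uses.
Point = tuple[float, float, float]


def joint_angle(parent: Point, joint: Point, child: Point) -> float:
    """Angle in degrees at `joint` between the bones joint->parent and joint->child.

    180 degrees means the limb is fully straight; smaller values mean more bend.
    """
    # Bone vectors pointing away from the joint.
    a = [p - j for p, j in zip(parent, joint)]
    b = [c - j for c, j in zip(child, joint)]
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("markers must not coincide")
    # Clamp so floating-point error can't push acos outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_theta))


# Hypothetical shoulder and wrist markers placed around the elbow position from the text.
shoulder = (1.2, 1.1, 1.5)
elbow = (1.2, 0.8, 1.5)
wrist = (1.5, 0.8, 1.5)

print(round(joint_angle(shoulder, elbow, wrist), 1))  # 90.0: the arm is bent at a right angle
```

Repeating this for every frame turns a stream of marker positions into a stream of joint angles, which is what actually moves the digital character.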

It sounds simple. But the real challenge isn’t the hardware. It’s the actor.

Think about Andy Serkis as Gollum. He didn’t just stand in a suit and wiggle. He worked out how a hunched, half-starved creature would move. He crawled on all fours for months. He learned to breathe like a trapped animal. His body movement was captured in a suit, and Weta’s animators matched Gollum’s face to footage of Serkis’s expressions, down to the twitch of a lip. That performance didn’t just get transferred to a digital model. It became the soul of the character.

Not every modern system needs reflective markers. Some use depth-sensing infrared cameras. Others use AI to interpret video footage from regular cameras and build movement data without any special suit. But the principle stays the same: real human motion becomes digital motion.

From Film to Games to Virtual Reality

Motion capture didn’t stay in Hollywood. It spread fast.

In video games, studios like Naughty Dog and Rockstar use it to make characters feel alive. In The Last of Us Part I, the way Ellie leans her head when she’s tired, the way Joel rubs his neck after a long day: those weren’t keyframed from scratch. They were captured from real actors rehearsing scenes, crying, laughing, arguing. The result? Players don’t just see characters. They feel like they’re sharing space with them.

Even virtual reality relies on it. Want to meet a digital version of yourself in a metaverse meeting? Motion capture lets your avatar mirror your real gestures: your hand waves, your head tilt, your nervous foot tap. Without it, VR avatars look like robots. With it, they feel human.

It’s not just about humans, either. Animators study real animals to get creature movement right, sometimes with motion capture and often with reference footage. The dragons in How to Train Your Dragon? Their flight patterns drew on studies of real birds of prey. The elephants in The Jungle Book? Their walks were modeled on footage of real elephants, studied frame by frame.

The Hidden Costs and Limitations

Motion capture isn’t perfect. It’s expensive. A single studio setup with 40 high-speed cameras, a motion capture stage, and a team of technicians can cost over $500,000. That’s before paying actors, editors, or software licenses.

It also doesn’t capture everything. Subtle details, like the faint tells on someone’s face when they’re lying, or how their posture shifts when they’re pretending to be fine, can slip past the sensors. Those details still need animators to tweak them by hand. That’s why motion capture is rarely used alone. It’s a foundation. Then artists refine it. They add weight. They exaggerate emotion. They make it feel more real than real life.

And sometimes, it fails. In early motion capture films, characters looked eerie: too smooth, too precise. That’s the uncanny valley. When something looks almost human but not quite, it creeps people out. That’s why studios now blend motion capture with traditional animation. They take the realism of the movement and soften the edges just enough to feel believable.

Real Examples You’ve Seen

You’ve probably watched motion capture without realizing it.

- Gollum in The Lord of the Rings - Andy Serkis’s performance, captured in a body suit and carried into Gollum’s face by animators working from footage of his expressions, became the gold standard.

- Caesar in the Planet of the Apes reboot trilogy - Serkis again, this time with a full facial capture helmet that recorded every micro-expression.

- Thanos in Avengers: Endgame - Josh Brolin wore a motion capture suit and a helmet with cameras pointed at his face. His voice and his facial tension shaped Thanos’s most chilling moments.

- Na’vi in the Avatar films - The cast performed in marker-covered suits with head-mounted cameras recording their faces. For Avatar: The Way of Water, James Cameron’s team went further and captured performances underwater, while motion rigs helped simulate riding alien creatures.

These aren’t just effects. They’re performances. The actors didn’t just lend their looks-they lent their humanity.

What’s Next for Motion Capture?

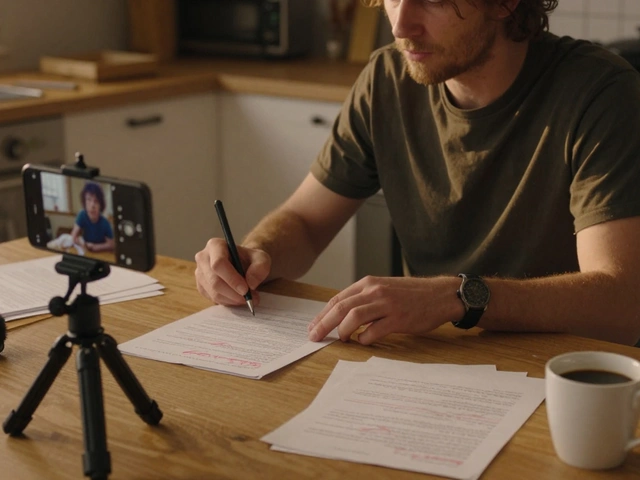

Today, you can do motion capture with a smartphone. Frameworks like Apple’s ARKit and Google’s ARCore let apps use a phone’s front camera to track facial expressions. A teenager in their bedroom can turn themselves into a cartoon character and stream it live. It’s not Hollywood-grade, but it’s proof the tech is democratizing.

Next-generation systems use machine learning to infer movement from minimal data. A single ordinary camera can now estimate a full-body pose from 2D video alone. That means smaller studios, indie filmmakers, even students can use motion capture without million-dollar setups.

And AI is starting to fill in the gaps. If an actor’s hand flicks too fast for the sensors to catch, AI can interpolate the missing motion. If a character’s foot slips on a digital floor, AI can adjust the weight distribution to make it look natural. The line between capture and creation is blurring.
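The simplest way to fill a gap is plain interpolation between the last frame before a marker drops out and the first frame after it comes back. The sketch below uses linear interpolation, not machine learning: it’s the classic baseline that smarter methods (splines, skeleton constraints, learned models) try to beat. The function name `fill_gaps` and the convention of marking lost frames with `None` are our own assumptions for illustration.

```python
from typing import Optional

# A marker position in 3D space (x, y, z).
Point = tuple[float, float, float]


def fill_gaps(track: list[Optional[Point]]) -> list[Optional[Point]]:
    """Linearly interpolate missing frames (None) in one marker's trajectory.

    Gaps at the very start or end have no known frame on one side, so they stay None.
    """
    filled = list(track)
    last_known = None  # index of the most recent frame that has data
    for i, p in enumerate(track):
        if p is None:
            continue
        if last_known is not None and i - last_known > 1:
            start, end = track[last_known], p
            span = i - last_known
            for k in range(1, span):
                t = k / span  # fraction of the way from start to end
                filled[last_known + k] = tuple(
                    s + t * (e - s) for s, e in zip(start, end)
                )
        last_known = i
    return filled


# A hand marker lost for two frames because it moved too fast for the cameras.
track = [(0.0, 1.0, 0.0), None, None, (3.0, 1.0, 0.0)]
for frame in fill_gaps(track):
    print(frame)
```

A straight line is fine for a short dropout, but over longer gaps it makes limbs glide unnaturally, which is exactly where learned models earn their keep.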

But here’s the truth: no matter how smart the software gets, it still needs a human to lead it. The emotion, the timing, the hesitation before a step: that’s not something algorithms understand. That’s why the best motion capture still starts with an actor standing in a room, breathing, thinking, feeling.

Why It Matters

Motion capture animation isn’t just about making cool effects. It’s about preserving performance. It’s about letting real people (actors with scars, voices, fears, and joy) live inside digital characters forever.

Think about it: in traditional animation, a character’s personality is built by artists interpreting a script. In motion capture, the personality comes from someone who lived the moment. That changes everything. The fear in Gollum’s eyes? Animators rendered it, but it started with Andy Serkis performing that fear.

As digital worlds grow bigger, motion capture becomes one of the best ways to keep them human. Without it, animation risks becoming sterile. Perfect. Lifeless.

With it? We get stories that move us, not because they’re beautifully rendered, but because they’re true.

Is motion capture the same as CGI?

No. CGI (computer-generated imagery) is the process of creating images using software. Motion capture is a method of recording real movement to feed into CGI. You can have CGI without motion capture, like keyframe animation where artists pose a digital character by hand, or fully simulated physics. But in film and games, motion capture data almost always ends up driving a CGI character.

Do actors wear special suits for motion capture?

Usually, yes. Most professional setups use tight-fitting suits with reflective markers or embedded sensors. But newer systems use camera-based tracking that doesn’t need suits, just regular clothes and good lighting. Some facial capture uses helmets with tiny cameras, not suits at all.

Can motion capture be done at home?

Yes, but with limits. Apple’s ARKit framework lets iPhone apps track basic face and body movements with the phone’s camera, and free tools like OpenPose can estimate body poses from ordinary webcam or phone video. You won’t get Hollywood quality, but you can create simple animations, test ideas, or make short films. Indie creators are already using this for YouTube content and VR experiences.

Why do some motion capture characters look creepy?

That’s called the uncanny valley. When a digital character moves almost like a human but has slightly off details-like eyes that don’t blink naturally, or skin that’s too smooth-it triggers discomfort. Studios fix this by blending motion capture with hand animation, adding imperfections, or stylizing the character to avoid realism.

Who invented motion capture?

The earliest versions date back to the 1970s, used in medical research to study human movement. Film and game studios experimented with it in the 1980s, but it wasn’t until the 1990s that commercial optical systems from companies like Vicon and Motion Analysis became common in entertainment. Andy Serkis’s work on Gollum in The Two Towers (2002) is often credited with proving motion capture could carry emotional depth in mainstream cinema.
