When you see Robert De Niro as a 30-year-old in The Irishman or Mark Hamill as a young Luke Skywalker in The Mandalorian, you’re not looking at makeup, prosthetics, or clever camera angles. You’re looking at de-aging technology: a blend of motion capture, artificial intelligence, and digital rendering that erases decades from an actor’s face. This isn’t science fiction anymore. It’s showing up in a growing number of major Hollywood films and streaming series released since 2020.

How De-Aging Actually Works

De-aging starts with two kinds of data: scans of the actor’s face as it is now, and reference material showing how it looked years ago. Studios like Industrial Light & Magic (ILM) and Weta Digital use high-resolution 3D scans taken during filming. These scans capture every wrinkle, dimple, and muscle movement at 120 frames per second. Then the team compares that data to archival footage of the actor from decades ago: think 1970s interviews, old movie stills, or even childhood photos.

The software doesn’t just smooth out skin. It rebuilds the entire facial structure. Cheekbones rise. Jawlines soften. Eyes become more open. The system tracks how the face moved when the actor was younger and applies those same patterns to their current performance. It’s like reverse-engineering time using geometry and physics.
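
The idea of applying the actor’s current movement to a younger face can be sketched in a few lines. This is a toy 2D version, assuming each face has been reduced to a short list of matching (x, y) landmark points; real studio pipelines work on dense 3D meshes and their exact methods are proprietary. The function name `transfer_expression`, the `strength` parameter, and the landmark values are hypothetical, chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def transfer_expression(
    current_neutral: Sequence[Point],
    current_frame: Sequence[Point],
    young_neutral: Sequence[Point],
    strength: float = 1.0,  # hypothetical knob: 1.0 copies the motion unchanged
) -> List[Point]:
    """Move a younger neutral face the way the actor's face moves in this frame.

    Each landmark's offset from the actor's current neutral pose is added to the
    matching landmark on the younger neutral face.
    """
    if not (len(current_neutral) == len(current_frame) == len(young_neutral)):
        raise ValueError("all landmark sets must have the same length")
    result: List[Point] = []
    for (nx, ny), (fx, fy), (yx, yy) in zip(current_neutral, current_frame, young_neutral):
        dx, dy = fx - nx, fy - ny  # how far this landmark moved in the performance
        result.append((yx + strength * dx, yy + strength * dy))
    return result


# Three toy landmarks: left brow, right brow, chin (y grows upward).
current_neutral = [(0.0, 0.0), (1.0, 0.0), (0.5, -1.0)]
current_frame = [(0.0, 0.1), (1.0, 0.1), (0.5, -1.2)]     # brows raised, jaw dropped
young_neutral = [(0.0, 0.05), (0.95, 0.05), (0.5, -0.9)]  # hypothetical younger shape
print(transfer_expression(current_neutral, current_frame, young_neutral))
```

Because only the offsets from the neutral pose are carried over, the younger face keeps its own proportions while moving the way the actor moves today.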

For example, in The Irishman, De Niro’s face was digitally mapped over his real performance. The team used over 2,000 reference images of De Niro from the 1970s. They didn’t just remove wrinkles - they restored the way his mouth curved when he smiled back then, how his eyebrows lifted when he was surprised, even how his skin stretched when he turned his head quickly. The result? A version of De Niro that looks like he did in Taxi Driver, but with the emotional depth of his current performance.

The Tools Behind the Magic

Three core technologies make modern de-aging possible:

- Facial motion capture: Actors wear head-mounted cameras or dot grids that track micro-movements. These aren’t just for animation - they record how the skin deforms under muscle tension, which is critical for realism.

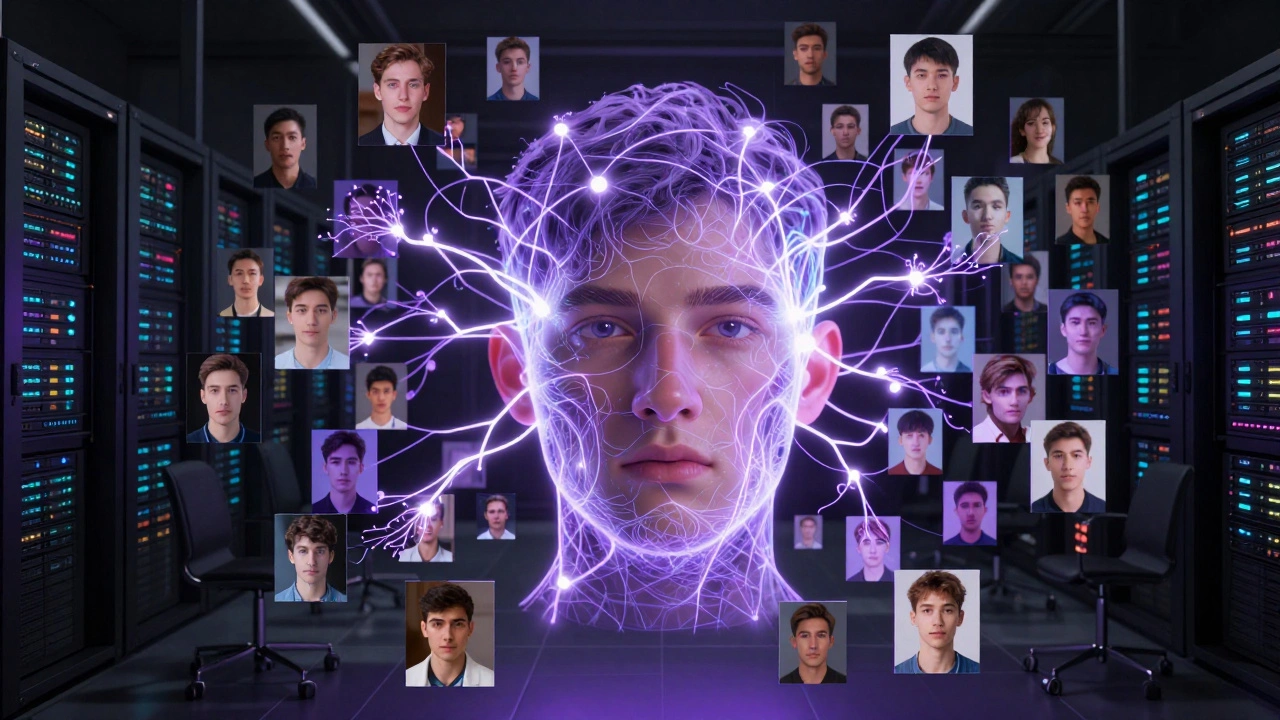

- AI-driven texture synthesis: Neural networks trained on thousands of hours of archival footage learn how skin, pores, and lighting behaved at different ages. These models generate realistic textures that change with lighting and angle, so individual frames don’t give the effect away.

- Deep learning regression: The software predicts how a face would have looked at age 25 based on its current state. It isn’t a blind guess: the prediction comes from statistical models built from real human aging patterns across thousands of subjects.
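
The regression idea can be illustrated with its simplest possible form: an ordinary least-squares line fitted to one facial measurement across reference images of known age, then evaluated at age 25. The "cheek fullness" measurement, its units, and the sample values below are made up; production systems use deep networks over many features, so treat this only as a sketch of the principle.

```python
from typing import Sequence, Tuple


def fit_line(ages: Sequence[float], values: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of value = intercept + slope * age."""
    if len(ages) != len(values) or len(ages) < 2:
        raise ValueError("need at least two (age, value) pairs")
    n = len(ages)
    mean_age = sum(ages) / n
    mean_value = sum(values) / n
    sxx = sum((a - mean_age) ** 2 for a in ages)
    if sxx == 0:
        raise ValueError("need at least two distinct ages")
    sxy = sum((a - mean_age) * (v - mean_value) for a, v in zip(ages, values))
    slope = sxy / sxx
    intercept = mean_value - slope * mean_age
    return slope, intercept


def predict_at_age(ages: Sequence[float], values: Sequence[float], target_age: float) -> float:
    """Evaluate the fitted line at target_age."""
    slope, intercept = fit_line(ages, values)
    return intercept + slope * target_age


# Hypothetical "cheek fullness" scores measured from reference photos.
ages = [30, 40, 50, 60]
fullness = [1.0, 0.9, 0.8, 0.7]
print(round(predict_at_age(ages, fullness, 25), 3))  # 1.05
```

Note that 25 lies outside the 30 to 60 range covered by the sample, so the result is an extrapolation; that is exactly where the amount and spread of reference material matter most.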

Companies like Digital Domain and Framestore have proprietary systems that can de-age faces with 98% accuracy in controlled lighting. But under harsh sunlight or fast motion? That’s where things get tricky.

Why It’s Not Just About Looks

De-aging fails when it ignores behavior. A 30-year-old doesn’t just look different - they move differently. Their posture, blink rate, vocal pitch, even how they hold a cigarette changes over time.

In The Mandalorian, Mark Hamill’s younger Luke Skywalker was recreated using a combination of his 1983 performance data and AI-generated muscle memory. The team didn’t just copy his face - they analyzed how he stood, how he shifted weight, how his voice cracked slightly when he spoke softly. That’s why the scene feels authentic. It’s not a mask. It’s a performance.

Some directors refuse to use de-aging for this reason. Christopher Nolan said in a 2023 interview that he’d rather cast a younger actor than risk an “uncanny valley” moment, where the face looks real but the movement doesn’t. He’s not alone. Many cinematographers still prefer practical solutions, such as aging makeup, stunt doubles, or reshoots, when the budget allows.

The Ethical Debate

De-aging isn’t just a technical challenge - it’s a moral one. In 2024, the Screen Actors Guild (SAG-AFTRA) added new clauses to contracts requiring studios to get written consent before digitally altering an actor’s appearance. This came after a controversial case where a late actor’s likeness was used in a commercial without family approval.

Some actors embrace it. Angela Bassett used de-aging in Black Panther: Wakanda Forever to play a younger version of her character, Ramonda, in flashbacks. She said it helped her connect with her character’s past in a way makeup never could. Others, like Tom Hanks, have publicly warned that digital resurrection could erase the natural arc of an actor’s career.

There’s also the issue of consent for deceased performers. In 2025, Disney used AI to recreate a young Carrie Fisher as Princess Leia in a posthumous cameo. The family approved it. But not all estates are so willing. Legal battles over digital likenesses are now common in Hollywood courts.

What’s Next for De-Aging

By 2026, de-aging is moving beyond film. TV shows like Star Trek: Strange New Worlds use it to bring back characters from the original series without recasting. Video games are catching up: Red Dead Redemption 2 used similar tech to age John Marston over the years its story covers.

Next-gen systems are starting to work in real time. On-set de-aging rigs now allow directors to see a younger version of an actor live during filming. No more waiting weeks for render farms to finish. This changes how scenes are shot - lighting, camera angles, even blocking are adjusted on the fly.
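
What "real time" demands is easy to put in numbers: at a given frame rate, all stages of the on-set pipeline together get 1000 / fps milliseconds per frame. The sketch below assumes a simple sequential pipeline; the stage names and timings are hypothetical placeholders, not measurements from any real rig.

```python
from typing import Dict, Tuple


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_real_time(stage_ms: Dict[str, float], fps: float) -> Tuple[bool, float]:
    """Return (fits, total_ms) for stages that run one after another on each frame."""
    total = sum(stage_ms.values())
    return total <= frame_budget_ms(fps), total


# Hypothetical per-frame timings for an on-set preview.
stages = {"capture": 5.0, "tracking": 12.0, "retarget": 8.0, "render": 15.0}
print(fits_real_time(stages, 24))  # (True, 40.0)
print(fits_real_time(stages, 30))  # (False, 40.0)
```

A pipelined rig could overlap stages so that throughput keeps up even when end-to-end latency exceeds one frame; the sequential check above is the stricter, simpler case.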

And it’s getting cheaper. In 2023, a full de-aging sequence cost $5 million. Today, indie studios can do it for under $500,000 using open-source tools like FaceSwap and DeepFaceLab. That means we’ll soon see de-aged performances in indie films, YouTube shorts, and even TikTok content.

The Real Cost of Looking Younger

There’s a hidden price tag. De-aging requires massive computing power. A single minute of de-aged footage can take 400 hours to render on a high-end GPU cluster. Depending on the size of the cluster, that can approach the energy a small town uses in a day.
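
Whether the small-town comparison holds depends on what the 400 hours measure. The arithmetic below treats them as wall-clock time on a cluster and varies the number of GPUs. The 700 W per GPU and 30 kWh per household per day are assumptions for illustration, not sourced figures, and the estimate ignores cooling and other overhead.

```python
def render_energy_kwh(wall_clock_hours: float, num_gpus: int, watts_per_gpu: float) -> float:
    """Energy drawn by the GPUs alone (ignores cooling and other overhead)."""
    return wall_clock_hours * num_gpus * watts_per_gpu / 1000.0


def household_days(energy_kwh: float, kwh_per_household_per_day: float) -> float:
    """How many households' daily consumption the energy corresponds to."""
    return energy_kwh / kwh_per_household_per_day


WATTS_PER_GPU = 700.0         # assumption
KWH_PER_HOUSEHOLD_DAY = 30.0  # assumption

for gpus in (1, 64):
    kwh = render_energy_kwh(400, gpus, WATTS_PER_GPU)
    print(gpus, kwh, round(household_days(kwh, KWH_PER_HOUSEHOLD_DAY)))
# 1 280.0 9
# 64 17920.0 597
```

On a single GPU, one minute of footage matches the daily use of a handful of homes; spread across dozens of GPUs for 400 hours, it reaches a few hundred households, which is where the small-town comparison starts to make sense.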

And it’s not just environmental. It’s creative. When studios rely too much on digital fixes, they stop casting younger actors. Roles meant for 20-somethings go to 50-year-olds who can be digitally de-aged - squeezing out newcomers. Some film schools report a 30% drop in auditions for young leads since 2022.

Worse, audiences are starting to notice. A 2025 study by the University of Southern California found that 62% of viewers under 30 could tell when an actor had been digitally de-aged - not because it looked fake, but because it felt off. “It’s like watching someone speak in a language they learned from a textbook,” said one respondent. “It’s correct, but it’s missing the soul.”

When De-Aging Works Best

De-aging shines in three situations:

- Flashbacks: When a story needs to show a character’s past without switching actors. Think Avengers: Endgame’s 2012 Loki scene.

- Legacy roles: Bringing back iconic characters for emotional payoffs - like Peter Parker in Spider-Man: No Way Home.

- Actor availability: When the original actor is unavailable, but their likeness is essential, as in Furious 7’s use of Paul Walker’s digital face.

It fails when used to make an actor look younger just because they’re “too old” for a role. That’s not storytelling - it’s vanity. And audiences see right through it.

Final Thought

De-aging isn’t about cheating time. It’s about honoring it. When used right, it lets actors tell stories they couldn’t otherwise. It lets us see the past come alive - not as a museum piece, but as a living, breathing moment.

But it’s a tool, not a crutch. The best de-aged scenes don’t make you gasp at the technology. They make you forget it was ever there.

Is de-aging technology legal?

Yes, but only with proper consent. Since 2024, SAG-AFTRA contracts have required written permission from actors or their estates before studios use digital de-aging on union productions. Using someone’s likeness without consent can lead to lawsuits, especially if the person is deceased. Studios now include digital likeness clauses in contracts.

Can de-aging be done on low-budget films?

Absolutely. Open-source tools like FaceSwap and DeepFaceLab can de-age faces on consumer-grade GPUs, and frame-interpolation models like DAIN can help smooth the output. Indie filmmakers have used these to create convincing results for under $10,000. The quality isn’t Hollywood-level, but for short films or web series, it’s more than enough.

Does de-aging replace makeup and prosthetics?

Not entirely. Makeup still wins for subtle aging - wrinkles, gray hair, skin texture under natural light. De-aging is best for full-face transformations or when the actor needs to look decades younger. Many productions now combine both: makeup for realism, digital tools for dramatic age shifts.

How accurate is AI in predicting how someone looked at 25?

Very accurate - if there’s enough reference material. AI learns from hundreds of images of the same person at different ages. Without those, it guesses based on general human aging patterns, which can lead to unnatural results. That’s why studios always try to get archival footage - it’s the key to realism.
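
The trade-off between person-specific reference and general aging patterns can be sketched as a weighted average that leans on the actor’s own data as it accumulates. This shrinkage-style blend and its `prior_strength` constant are assumptions for illustration; the FAQ does not say how any studio actually combines the two.

```python
from typing import Tuple


def blended_estimate(
    person_estimate: float,
    population_estimate: float,
    n_reference_images: int,
    prior_strength: float = 50.0,  # hypothetical: image count at which both estimates weigh equally
) -> Tuple[float, float]:
    """Blend a person-specific prediction with a population-average one.

    The weight on the person-specific estimate grows with the number of
    reference images: w = n / (n + prior_strength).
    """
    if n_reference_images < 0 or prior_strength <= 0:
        raise ValueError("invalid inputs")
    w = n_reference_images / (n_reference_images + prior_strength)
    return w * person_estimate + (1 - w) * population_estimate, w


for n in (0, 50, 450):
    value, weight = blended_estimate(1.05, 0.95, n)
    print(n, round(weight, 2), round(value, 3))
# 0 0.0 0.95
# 50 0.5 1.0
# 450 0.9 1.04
```

With no reference images, the result falls back entirely on general aging patterns; with hundreds, the actor’s own data dominates.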

Why do some de-aged scenes feel creepy?

It’s called the uncanny valley. When the face looks young but the movement, voice, or lighting doesn’t match, your brain senses something’s wrong. Even tiny delays in blink timing or unnatural skin shine can trigger discomfort. The best de-aged scenes match not just appearance, but behavior - how the person moved, spoke, and reacted emotionally.
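
Blink timing is one behavioral cue that can be checked numerically. A minimal sketch, assuming a face tracker already supplies a per-frame eye-openness value (for example an eye aspect ratio computed from eyelid landmarks) and that values below a hypothetical threshold of 0.2 mean the eye is closed: count blink onsets, convert them to blinks per minute, and compare against the rate measured in reference footage of the actor.

```python
from typing import List, Sequence


def blink_onsets(openness: Sequence[float], threshold: float = 0.2) -> List[int]:
    """Frame indices where the eye goes from open to closed."""
    onsets: List[int] = []
    closed = False
    for i, value in enumerate(openness):
        if value < threshold and not closed:
            onsets.append(i)
            closed = True
        elif value >= threshold:
            closed = False
    return onsets


def blinks_per_minute(openness: Sequence[float], fps: float, threshold: float = 0.2) -> float:
    """Blink rate over the whole clip."""
    if fps <= 0 or not openness:
        raise ValueError("need a positive fps and a non-empty signal")
    minutes = len(openness) / fps / 60.0
    return len(blink_onsets(openness, threshold)) / minutes


def rate_mismatch(clip_rate: float, reference_rate: float) -> float:
    """Relative difference between the de-aged clip and the reference footage."""
    return abs(clip_rate - reference_rate) / reference_rate


# A synthetic 10-second clip at 24 fps containing three blinks.
signal = [0.3] * 240
for frame in (10, 11, 100, 200, 201, 202):
    signal[frame] = 0.1
print(blink_onsets(signal))                     # [10, 100, 200]
print(round(blinks_per_minute(signal, 24), 1))  # 18.0
```

If the reference footage showed, say, 15 blinks per minute, `rate_mismatch(18.0, 15.0)` would report a 20% difference. A large mismatch is a hint, not proof, that the performance will read as off.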

Is de-aging used in TV shows too?

Yes, and it’s growing fast. Shows like Star Trek: Strange New Worlds, The Boys, and Obi-Wan Kenobi use de-aging for flashbacks and legacy characters. TV budgets are tighter, so studios use faster, AI-driven pipelines that can render de-aged scenes in hours instead of weeks.
