Ever spent 20 minutes scrolling through movie reviews just to still not know if a film is worth watching? You’re not alone. Two names dominate the conversation: Rotten Tomatoes and Metacritic. They look similar - both show scores, both claim to summarize critics - but they work in completely different ways. And that difference changes how you decide what to watch.

How Rotten Tomatoes Splits Critics Into Two Boxes

Rotten Tomatoes doesn’t average scores. It counts yes-or-no votes. Every review is labeled either "Fresh" or "Rotten"; as a rule of thumb, a 6/10 or higher is Fresh and anything below is Rotten. The percentage you see? That’s just the percentage of critics who said "yes." A 90% rating doesn’t mean critics loved it - it just means 9 out of 10 gave it a passing grade. One critic might give it a 6.1, another a 10. Both count the same.

This system works great for quick decisions. If a movie hits 95% Fresh, you assume it’s broadly liked. But it hides nuance. A movie with 100 reviews averaging 6.8/10 can still be 92% Fresh. That’s not a masterpiece - it’s just barely acceptable to most critics. Yet the score makes it look like a triumph.
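To see how a Tomatometer can run far ahead of the average score, here’s a minimal Python sketch. It assumes a strict 6/10 cutoff for Fresh (in practice each review simply carries a Fresh or Rotten label, so treat the cutoff as a simplification). The scores are made up to match the example above: 92 reviews at 7/10 and 8 reviews at 4.5/10.

```python
def tomatometer(scores, cutoff=6.0):
    """Percentage of reviews at or above the cutoff (assumed 6/10 rule)."""
    if not scores:
        raise ValueError("need at least one review")
    fresh = sum(1 for s in scores if s >= cutoff)
    return round(100 * fresh / len(scores))

def average(scores):
    """Plain mean of the review scores on a 10-point scale."""
    return sum(scores) / len(scores)

# Hypothetical reviews: 92 lukewarm-positive, 8 negative.
scores = [7.0] * 92 + [4.5] * 8

print(tomatometer(scores))        # 92
print(round(average(scores), 1))  # 6.8

# A 6.1 and a 10 count exactly the same: both are simply "Fresh".
print(tomatometer([6.1, 10.0]))   # 100
```

The point of the sketch is that `tomatometer` throws away everything except which side of the cutoff each review lands on.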

And then there’s the "Certified Fresh" badge. To earn it, a wide release needs a Tomatometer of at least 75%, at least five reviews from Top Critics, and a minimum of 80 reviews total. It sounds official. But it’s just a marketing label. It doesn’t measure quality - it measures volume and consistency.
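Here’s the Certified Fresh rule as described above, written as a small Python check. The thresholds are the ones listed in this article; Rotten Tomatoes’ real criteria can include extra conditions this sketch ignores, and the `Review` type is a hypothetical stand-in for real review data.

```python
from dataclasses import dataclass

@dataclass
class Review:
    fresh: bool        # did the review count as Fresh?
    top_critic: bool   # is the reviewer a designated Top Critic?

def is_certified_fresh(reviews, min_score=75, min_reviews=80, min_top_critics=5):
    """Sketch of the badge rule: enough reviews, enough Top Critics, 75%+ Fresh."""
    total = len(reviews)
    if total < min_reviews:
        return False
    top_critics = sum(1 for r in reviews if r.top_critic)
    if top_critics < min_top_critics:
        return False
    fresh = sum(1 for r in reviews if r.fresh)
    return 100 * fresh / total >= min_score

# 80 reviews, 60 Fresh (75%), 5 from Top Critics: just qualifies.
reviews = [Review(fresh=i < 60, top_critic=i < 5) for i in range(80)]
print(is_certified_fresh(reviews))       # True
print(is_certified_fresh(reviews[:79]))  # False: only 79 reviews
```

Notice that nothing in the check looks at how good the reviews were, only how many there were and which way they leaned.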

Metacritic Weighs Every Review Like a Scale

Metacritic takes a different approach. It doesn’t count yes-or-no votes at all. It converts every review into a numeric score from 0 to 100, then calculates a weighted average. A 7/10 becomes 70. A 9/10 becomes 90. A 3/5 becomes 60. Critics from more prominent publications get more weight. Metacritic doesn’t publish its exact weights, but a review from The New York Times is likely to count for more than one from a small outlet.
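A rough Python sketch of the normalize-then-weight idea follows. Metacritic doesn’t publish its weights, so the weights below (1.5 for a more prominent outlet, 1.0 for everyone else) are purely hypothetical, as is the rounding to a whole number.

```python
def to_100(score, scale_max):
    """Rescale a rating from a 0..scale_max scale onto 0..100."""
    return 100 * score / scale_max

def weighted_metascore(reviews):
    """Weighted average of (score, scale_max, weight) tuples, rounded.

    The weights are hypothetical; Metacritic does not publish its own.
    """
    total_weight = sum(weight for _, _, weight in reviews)
    weighted_sum = sum(to_100(score, scale_max) * weight
                       for score, scale_max, weight in reviews)
    return round(weighted_sum / total_weight)

reviews = [
    (9, 10, 1.5),  # 9/10 -> 90, from a (hypothetically) more influential outlet
    (3, 5, 1.0),   # 3/5  -> 60
    (7, 10, 1.0),  # 7/10 -> 70
]
print(weighted_metascore(reviews))                               # 76
print(weighted_metascore([(s, m, 1.0) for s, m, _ in reviews]))  # 73, unweighted
```

Giving the 9/10 review extra weight nudges the result from 73 to 76, which is the kind of shift the weighting is meant to produce.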

This means a movie with a 75 on Metacritic was, on average, rated as "good" - not just barely acceptable. Rotten Tomatoes can’t make that distinction. Take a movie where half the critics gave it 10/10 and the other half gave it 1/10: Rotten Tomatoes would call it 50% Fresh, while the average is 5.5/10, or about 55 on Metacritic’s scale. Metacritic shows you the middle ground.
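The split-vote case is easy to check in a few lines of Python. This sketch compares a hypothetical polarized film (fifty 10/10 reviews, fifty 1/10) with a hypothetical consensus film (a hundred reviews at 7.5/10), assuming a 6/10 Fresh cutoff and an unweighted average.

```python
def fresh_pct(scores, cutoff=6.0):
    """Share of reviews at or above the assumed 6/10 cutoff, as a percentage."""
    return 100 * sum(s >= cutoff for s in scores) / len(scores)

def average_out_of_100(scores):
    """Unweighted mean of 10-point scores, rescaled to 0..100."""
    return 10 * sum(scores) / len(scores)

polarized = [10] * 50 + [1] * 50   # critics split down the middle
consensus = [7.5] * 100            # every critic found it solid

for name, scores in [("polarized", polarized), ("consensus", consensus)]:
    print(name, fresh_pct(scores), average_out_of_100(scores))
# polarized 50.0 55.0
# consensus 100.0 75.0
```

The consensus film shows the bigger gap: a perfect 100% Fresh next to a 75 average.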

Metacritic also keeps critics and users apart. The main score you see, the Metascore, is based only on professional critics; user ratings go into a separate User Score. That keeps the professional rating clean. Rotten Tomatoes does the same thing, showing its critic-based Tomatometer separately from its Audience Score.

Why the Same Movie Gets Different Scores

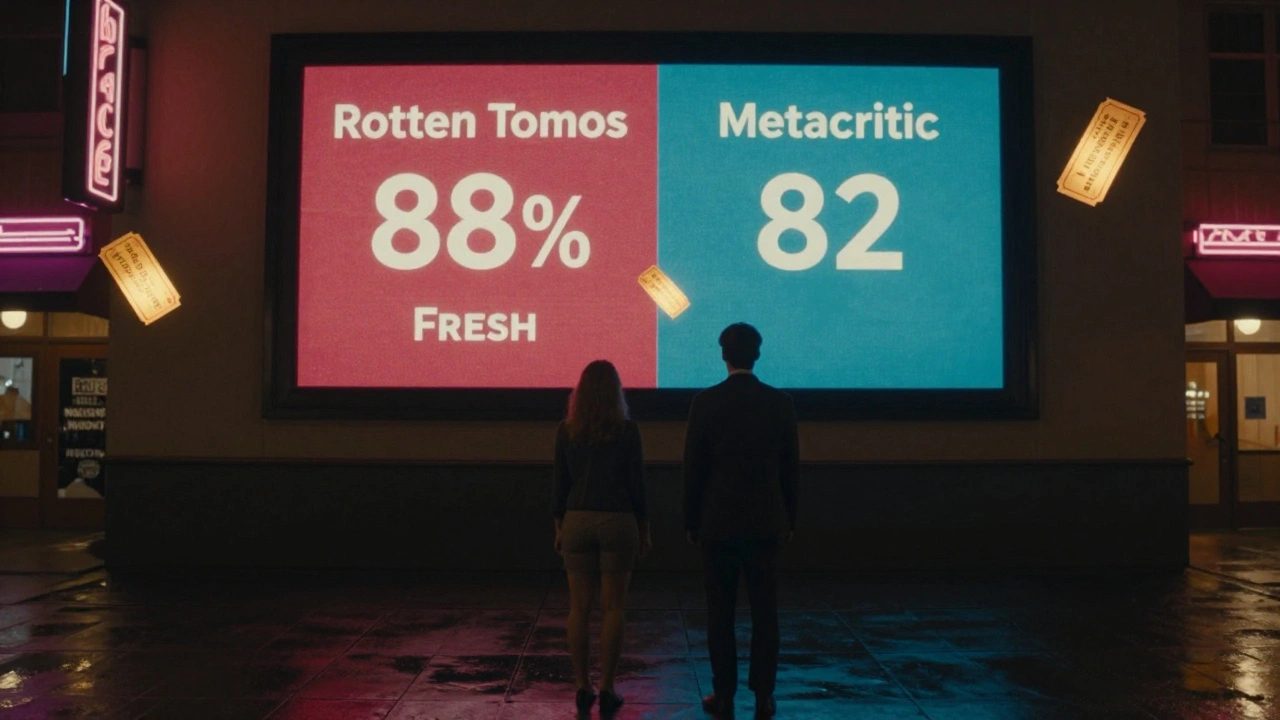

Take Barbie (2023). Rotten Tomatoes gave it 88% Fresh. Metacritic gave it 82. Why the gap?

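Rotten Tomatoes counted 580 critics. 510 gave it a passing grade. That’s 88%. But among those 510, many gave it scores like 6.5 or 7. The average critic score? Around 7.3/10. Metacritic converted its own, smaller pool of reviews into numbers, weighted the big-name critics more, and landed at 82. That won’t exactly match Rotten Tomatoes’ 7.3 average (73 on a 100-point scale), because the critic pools and the weighting differ, but both averages sit well below 88%.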

Now look at Oppenheimer. Rotten Tomatoes: 93% Fresh. Metacritic: 87. Again, the difference isn’t about quality - it’s about method. Rotten Tomatoes doesn’t care if the 93% includes a lot of 7s. Metacritic does, which arguably makes the 87 a more honest reflection of what critics actually thought.

There’s also John Wick: Chapter 4. Rotten Tomatoes: 86% Fresh. Metacritic: 79. The movie was widely praised, but not universally loved. Metacritic captured that tension. Rotten Tomatoes smoothed it over.

What the Scores Don’t Tell You

Both sites have blind spots. Rotten Tomatoes ignores tone. A Fresh review that spends most of its length calling a film "shallow" and "overhyped" counts exactly as much as a rave. Metacritic doesn’t always catch new voices. Indie critics, YouTube reviewers, or regional journalists often don’t make the cut - even if their insights are sharp.

Neither site tracks audience sentiment well. Rotten Tomatoes’ Audience Score is just the percentage of users who rated the movie 3.5/5 or higher. Metacritic’s User Score is an average - but it’s swamped by casual viewers who rate on impulse. A movie like The Marvels got a 78% on Rotten Tomatoes from critics but a 45% from audiences. That gap isn’t about quality - it’s about expectations. Critics expected a superhero film. Audiences expected a masterpiece.
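The two user scores are calculated differently even from identical opinions. This Python sketch assumes five hypothetical viewers rating on Rotten Tomatoes’ 5-star scale, with the same opinions doubled onto Metacritic’s 0-10 scale, and follows the two methods described above.

```python
def rt_audience_score(stars):
    """Percent of ratings at 3.5 stars or higher (5-star scale)."""
    return round(100 * sum(s >= 3.5 for s in stars) / len(stars))

def mc_user_score(ratings):
    """Plain average of 0-10 ratings, to one decimal place."""
    return round(sum(ratings) / len(ratings), 1)

stars = [5, 4, 3.5, 3, 1]           # hypothetical viewers, 5-star scale
ten_point = [s * 2 for s in stars]  # the same opinions on a 0-10 scale

print(rt_audience_score(stars))     # 60
print(mc_user_score(ten_point))     # 6.6
```

The viewer who gave 3 stars counts as a "no" on Rotten Tomatoes but still pulls the Metacritic average toward the middle.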

Also, both sites are biased toward big releases. Independent films with limited reviews get buried. A 90% Fresh rating on Rotten Tomatoes for a small indie film might be based on only 10 reviews. That’s not a consensus - it could easily be a fluke.
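How shaky is a score built on a handful of reviews? One standard way to put a number on it is a Wilson score confidence interval for the share of Fresh reviews. Neither site displays this, so it’s only an illustration. Here’s a minimal Python sketch using hypothetical counts of 9 Fresh out of 10 versus 180 Fresh out of 200.

```python
import math

def wilson_interval(fresh, total, z=1.96):
    """Approximate 95% Wilson score interval for the true Fresh share."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = fresh / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half_width, centre + half_width

for fresh, total in [(9, 10), (180, 200)]:
    low, high = wilson_interval(fresh, total)
    print(f"{fresh}/{total}: {low:.0%} to {high:.0%}")
```

Both films show 90% Fresh. With 10 reviews, though, the true Fresh share could plausibly be anywhere from about 60% to 98%; with 200 reviews the range narrows to roughly 85% to 93%. The interval treats reviews as independent random draws, which real critic pools aren’t, so read the ranges as a rough guide.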

When to Trust Which Score

Use Rotten Tomatoes when you want to know if a movie is broadly liked. If it’s 90%+ Fresh, it’s probably worth a watch. If it’s under 60%, it’s likely not for you. It’s great for filtering out the obvious duds.

Use Metacritic when you want to know how good it actually is. If a movie scores 80+, it’s been consistently praised. A 70-79? Solid, but not exceptional. 60-69? Mixed; read a few reviews. Below 60? Probably not worth your time. It’s better for judging quality, not popularity.

For blockbusters, check both. If Rotten Tomatoes says 90% Fresh but Metacritic says 68, something’s off. Most likely, lots of critics found it merely okay: positive enough to count as Fresh, not enthusiastic enough to score it highly. Look at the reviews. Don’t just trust the number.
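To make "something’s off" concrete, a tiny Python helper can flag a big gap between the two numbers. The 15-point threshold is an arbitrary assumption, not a rule either site uses; tune it to taste.

```python
def needs_a_closer_look(tomatometer_pct, metascore, max_gap=15):
    """Flag films whose Fresh percentage runs far ahead of their Metascore.

    max_gap is a hypothetical threshold chosen for illustration.
    """
    return tomatometer_pct - metascore > max_gap

print(needs_a_closer_look(90, 68))  # True: a 22-point gap
print(needs_a_closer_look(88, 82))  # False: a 6-point gap
```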

For indie films, dig deeper. A 70% on Rotten Tomatoes might mean 10 reviews - 7 Fresh, 3 Rotten. That’s not enough to go on. Check Metacritic. If it’s not there, look at Letterboxd or IMDb user reviews. Don’t rely on one source.

How Real Moviegoers Use Them

Many people don’t know how these sites work. They see "95%" and assume it’s perfect. That’s why bad movies with high scores still sell out theaters. And why great, quiet films get ignored because they’re at 75%.

People who care about film - critics, filmmakers, cinephiles - use both. They know Rotten Tomatoes tells you if critics broadly liked a movie. Metacritic tells you if it’s good. They cross-check. They read the reviews. They don’t just click "Watch Now" because of a number.

And here’s the truth: no score tells you if you’ll like it. Your taste matters more than any algorithm. But these tools help you filter the noise. They save you from wasting time on movies that are either too bad or too boring.

What’s Missing From Both Sites

Neither site tracks how a movie ages. A film with a 70 today might be seen as a classic in 10 years. Neither tracks diversity of opinion. A 90% Fresh rating doesn’t tell you if critics from different backgrounds agreed - or if it was just a homogeneous group of reviewers.

They also ignore context. A 75 for a $200 million blockbuster is a win. A 75 for a $500,000 indie drama is a triumph. The scores don’t adjust for budget, ambition, or cultural impact.

And they don’t account for release timing. A movie released in January often gets fewer reviews than one released in December. That skews the numbers. A film with 50 reviews in January might be at 80%. The same film in December, with 200 reviews, might drop to 75% - not because it’s worse, but because more critics saw it.

The most reliable way to judge a film? Watch it yourself. But if you’re deciding whether to spend two hours on something, Rotten Tomatoes and Metacritic are the best tools we’ve got - if you know how to read them.

Is Rotten Tomatoes or Metacritic more accurate?

Metacritic is more accurate for measuring overall critical sentiment because it uses a weighted average of scores. Rotten Tomatoes only shows whether critics gave a movie a passing grade, not how good it was. If you want to know if a film is truly well-made, Metacritic’s score is closer to the truth.

Why do some movies have high Rotten Tomatoes scores but low Metacritic scores?

This happens when a movie gets a lot of mediocre reviews - just barely above the 6/10 cutoff. Rotten Tomatoes counts all of them as "Fresh," inflating the percentage. Metacritic averages those scores and brings the number down. A movie at 85% Fresh with a Metacritic score of 65 most likely got lots of reviews saying it was okay, not great.

Can a movie be good even with a low Metacritic score?

Absolutely. Metacritic reflects what professional critics thought - not what audiences felt - and the two often part ways. Blade Runner 2049 (Metacritic: 81), for example, was critically praised but underperformed at the box office because it didn’t appeal to mainstream tastes. The gap runs the other way too: a film can miss with critics and still be exactly what you want. A low score doesn’t mean bad - it might mean niche, slow, or challenging.

Should I trust user reviews on these sites?

Not as a primary guide. User scores are often skewed by fans, trolls, or people who haven’t even seen the movie. Use them as a secondary check - if 80% of users hated a movie that critics loved, maybe something’s wrong. But don’t let a 3.5/10 user score kill a movie you’re curious about.

Do studios manipulate Rotten Tomatoes scores?

Sometimes, at least indirectly. Studios decide who gets into early screenings, and critics likely to be friendly can get in first. They also set review embargoes that shape the first wave of coverage, and they amplify the positive reviews in their marketing. That’s why early Rotten Tomatoes scores often look inflated - they can be based on a biased sample. Wait until more reviews come in before trusting the number.

Final Takeaway: Use Both, But Know the Difference

Rotten Tomatoes is your quick filter. Metacritic is your quality checker. One tells you if critics liked it. The other tells you how much they liked it. Neither is perfect. But together, they give you a real picture.

Next time you’re deciding what to watch, check both. If Rotten Tomatoes says 90% Fresh and Metacritic says 85 - go for it. If Rotten Tomatoes says 70% Fresh but Metacritic says 60 - maybe skip it. If Rotten Tomatoes says 50% Fresh and Metacritic says 45 - don’t waste your time.
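The rules of thumb above, written out as a Python function. It reads each pair of numbers as a threshold ("at least 90% and 85", "at most 70% and 60", and so on), which is an interpretation. The fallback for everything in between ("read a few reviews") is an assumption added to cover cases the rules don’t mention.

```python
def verdict(tomatometer_pct, metascore):
    """Turn the two scores into a rough watch/skip suggestion."""
    if tomatometer_pct >= 90 and metascore >= 85:
        return "go for it"
    # Check the harshest rule first, since 50/45 would also match the next one.
    if tomatometer_pct <= 50 and metascore <= 45:
        return "don't waste your time"
    if tomatometer_pct <= 70 and metascore <= 60:
        return "maybe skip it"
    return "read a few reviews"  # assumed fallback for the in-between cases

print(verdict(90, 85))  # go for it
print(verdict(70, 60))  # maybe skip it
print(verdict(50, 45))  # don't waste your time
print(verdict(90, 68))  # read a few reviews
```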

And if you still can’t decide? Watch the trailer. Read one review from a critic you trust. Then make your own call. The numbers help. But your taste? That’s the only score that really matters.
